So this week we covered motion blur in games, which is pretty interesting. I've always had a rough idea of it but never really understood how it was properly done. When objects move fast in a scene, or when the camera moves quickly, objects in the scene become blurry. When you record something with a camera and quickly rotate it, the image gets a horizontal blur.

This is because when a camera captures incoming light, the image sensor is exposed to light for a period of time that depends on the shutter speed. When an object moves quickly enough, the sensor sees it at several positions during a single exposure, and those positions get blended together and averaged. The objects or pixels that remain in roughly the same position come out clearer than the rest when averaged.

How do we do it in games?

- Old-fashioned way

Simulate real life: create a buffer that takes in multiple frames over a period of time and averages them. The most recent frame gets the highest weight in the blend. It basically emulates how a normal camera functions: we record a number of frames over an interval, and each frame slowly becomes less visible over time. Although it works, this method is very inefficient and can be slow, since a number of frames need to be rendered.
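Here is a rough sketch of that accumulation idea. It's written in Python purely for illustration; the function name `blend_frames`, the `decay` factor and the flat-list frame layout are my own assumptions, not how any particular engine does it.

```python
def blend_frames(frames, decay=0.5):
    """Average several frames, weighting the most recent one the most.

    frames: list of frames, oldest first; each frame is a flat list of
            pixel intensities of equal length (hypothetical layout).
    decay:  assumed falloff; each older frame gets the newer frame's
            weight multiplied by this factor.
    """
    newest = len(frames) - 1
    # Weight 1 for the newest frame, decay for the one before it, and so on.
    weights = [decay ** (newest - i) for i in range(len(frames))]
    total = sum(weights)
    pixel_count = len(frames[0])
    return [
        sum(w * frame[p] for w, frame in zip(weights, frames)) / total
        for p in range(pixel_count)
    ]
```

Every frame in `frames` still has to be rendered and kept around, which is exactly why this approach gets expensive.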

- Modern way

We have something called motion or velocity vectors, and we take a few things into account. In the first pass we extract each pixel's position from the current scene. We then transform those positions from screen space back to world space with the inverse of the current camera view-projection matrix, and use the previous frame's view-projection matrix to find where each pixel was in the previous frame. So we have 2 versions of the pixel: one at last frame's position and one at the current frame's position. We simply take the difference of the two, and that gives us a velocity vector: the direction and length of the blur for that pixel.
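The reprojection step can be sketched roughly like this. It's Python rather than shader code, and everything in it is an assumption for illustration: the helper names, the row-major 4x4 matrix layout, and the fact that the caller supplies the already inverted view-projection matrix. This is not the code from my failed attempt.

```python
def mat_vec(m, v):
    """Multiply a 4x4 row-major matrix by a 4-component column vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def pixel_velocity(ndc_xy, depth, inv_view_proj, prev_view_proj):
    """Return the screen-space velocity (dx, dy) of one pixel.

    ndc_xy:         pixel position in normalized device coordinates
    depth:          depth of the pixel in the same space
    inv_view_proj:  inverse of the current view-projection matrix
    prev_view_proj: view-projection matrix of the previous frame
    """
    # Current pixel -> world space (undo the current view-projection).
    world = mat_vec(inv_view_proj, [ndc_xy[0], ndc_xy[1], depth, 1.0])
    world = [c / world[3] for c in world]  # perspective divide

    # World space -> where that point was on screen last frame.
    prev = mat_vec(prev_view_proj, world)
    prev_xy = (prev[0] / prev[3], prev[1] / prev[3])

    # Velocity is the difference between the two screen positions.
    return (ndc_xy[0] - prev_xy[0], ndc_xy[1] - prev_xy[1])
```

The blur pass would then sample the scene texture a few times along this vector and average the samples.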

I was really excited to try implementing this technique as it seemed pretty straightforward. I think it would really complement our quick-paced GDW game.

Unfortunately, this turned out to be a lot more difficult than I thought. My implementation looked awful and the screen jittered strangely. There was probably something wrong in how I transformed the pixel positions or in the related calculations. Oh well...

On the bright side though, I did come up with a makeshift simple motion blur shader that doesn't take the pixel position into account and does a cool sweep blur when you turn the camera quickly. It doesn't require 2 passes and looks pretty nice anyway.

Sunday, 23 March 2014

Sunday, 16 March 2014

UOIT Game Dev - Development Blog 8 - The Portal Challenge

So this week instead of another lecture, we were given a challenge. And that challenge was to create functioning portals from the game Portal in 1 hour and 30 minutes. This was a very interesting challenge since prior to that class I wasn't able to successfully render FBO textures to 3D quads.

So we formed a group of 4 and began to brainstorm. The first thing was to get an actual quad in there, so all I did was model a plane in Maya and import it into my game. Then we tried to render "something" onto that plane; we wanted the quad to display the scene from our camera's perspective.

What I did next was use my HDR shader class to render the scene to an FBO texture. I drew the scene in the first pass to get the scene texture, then rendered it again with the quad's texture replaced with the FBO texture.

What happened next was weird. Although we were able to render something on the quad, the texture looked really stretched and all we could see were lines of colour. It took us about 10 minutes to figure out what was wrong, and it turned out to be the way we declared the texture parameters for our FBO texture. I replaced the parameters with the same ones we used to load the textures for our models and it worked flawlessly.

So with that out of the way we wanted 2 portals, each of them displaying the scene. This was really simple as we just repeated the first step.

Now how do we make it so one portal displays whatever the other portal is looking at? Hmm....

Well this requires more passes and saved camera transformations.

We saved the initial camera matrix prior to rendering, took our camera and set it up on Portal A's position and view then rendered the scene. We did the same with Portal B and now there are 2 scene textures available rendered at 2 different locations.

All we did for the final render pass was apply Portal B's scene texture to Portal A and vice versa.
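The pass order described above can be sketched like this. It's only a Python outline: `render_from` stands in for "render the scene from this view into an FBO texture", and all of the names are hypothetical, not our actual engine code.

```python
def portal_pass(render_from, player_camera, portal_a_view, portal_b_view):
    """Render one scene texture per portal, then swap them.

    render_from:   callable that renders the scene from a given view and
                   returns the resulting texture (stand-in for an FBO pass)
    player_camera: the camera state to restore for the final pass
    Returns the saved camera and the texture each portal should display.
    """
    saved_camera = player_camera            # keep the player's view for later
    texture_a = render_from(portal_a_view)  # scene as seen from portal A
    texture_b = render_from(portal_b_view)  # scene as seen from portal B
    # Final pass: each portal shows what the *other* portal sees.
    return saved_camera, {"A": texture_b, "B": texture_a}
```

The final render pass then draws the scene from the saved camera, with each portal quad bound to the texture listed for it.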

This pretty much did the trick; however, we still didn't have parallax working, so it seemed strange to have the portal display a static image.

Luckily this was an easy fix. We simply took the directions from the camera to each of the portal positions, found the angle of each direction, then rotated it about 180 degrees to face the player. This was the final tweak that allowed us to come up with a functioning portal (other than the actual physics part lol).
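A minimal sketch of that angle calculation, assuming a Y-up world where yaw is measured around the Y axis starting from +Z, and assuming the rotation is exactly 180 degrees. Both conventions and the function name are my assumptions for the example.

```python
import math


def portal_view_yaw(camera_pos, portal_pos):
    """Yaw (in degrees) for a portal's view so that it faces the player.

    camera_pos, portal_pos: (x, y, z) positions in world space.
    """
    dx = portal_pos[0] - camera_pos[0]
    dz = portal_pos[2] - camera_pos[2]
    # Angle of the camera-to-portal direction on the ground plane.
    yaw = math.degrees(math.atan2(dx, dz))
    # Turn it around by 180 degrees so it points back at the player.
    return (yaw + 180.0) % 360.0
```

Recomputing this every frame is what makes the portal image shift as the player moves instead of staying static.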

On the development side, I've finally decided to implement tangent space normal mapping. It added a lot more detail to our map and looks pretty nice. Also, GIMP's normal mapping plugin is amazing and pretty much allowed me to make normal maps in less than a minute.

Well, that's all for this week. Our game is near beta, we should have all of the functionality done by next week.

Sunday, 9 March 2014

UOIT Game Dev - Development Blog 7 - Road to Alpha

The progress so far...

After all of the assignments and midterms thrown at us in the third month, I have finally found some time to work on our game once again. There are roughly 3 weeks left and a lot of things still need to be implemented. Thankfully, we got the majority of the gameplay done in the past week and have a lot of the core mechanics working as well.

I have finished up our night level "Nightfall" which should be the second map the player plays during the campaign.

The enemies are properly implemented with path following and chase behaviours. I have implemented a Game Manager class that takes care of things like enemy spawn locations, wave wait time, wave count, when to end the wave, and the camera. Each wave, the game manager will spawn more enemies with higher stats, and it also determines how many waves the player must survive to complete the level.

In terms of mechanics for our character, I've decided to take some inspiration from one of my favourite childhood games, "GunZ: the duel". In it the player can do things like wall run and roll, which makes the gameplay really fast paced and fun. So now our character can roll in any direction and also run along walls, which is pretty neat.

In addition, we have added turrets to our level. The player may spawn turrets to aid in battle once they have the required amount of resources. These turrets aim and shoot at nearby enemies that enter their attack radius.
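The radius check for a turret can be sketched like this. Picking the closest enemy is my own assumption for the example (the post only says "nearby enemies"), and `pick_target` is a hypothetical name, not a function from our game.

```python
import math


def pick_target(turret_pos, enemies, attack_radius):
    """Return the position of the closest enemy inside the attack radius.

    turret_pos: (x, z) position of the turret
    enemies:    list of (x, z) enemy positions
    Returns None when no enemy is within range.
    """
    best, best_dist = None, attack_radius
    for enemy in enemies:
        dist = math.dist(turret_pos, enemy)
        if dist <= best_dist:  # inside the radius and at least as close as the current best
            best, best_dist = enemy, dist
    return best
```

For example, `pick_target((0, 0), [(5, 0), (2, 0), (10, 0)], 6)` picks the enemy at `(2, 0)` and ignores the one at `(10, 0)`, which is out of range.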

Lastly for aesthetic purposes, I have made use of our particle system to create a volumetric fog-like effect. It worked out pretty nicely and makes the overall map look a lot better.

We now have a few things left to do:

- get our card system working

- add in the additional enemies and their behaviours

- add in the 2 last weapons

- have a functioning generator or nexus for the player to defend

- work on the other levels (shouldn't be difficult once we have all of the mechanics in place)

- tighten up the graphics on level 3 https://www.youtube.com/watch?v=BRWvfMLl4ho

Sunday, 16 February 2014

UOIT Game Dev - Development Blog 6 - Size Matters

This will be different from my usual blog posts about computer graphics. Instead, it will be more of a journal entry about our team's experience developing a 48 hour game for The Great Canadian Appathon, and the things I've learned.

When the theme "Fantasy" was announced, I had no clue what to think. Other than dragons, fairies, mages and knights, there was very little I could come up with for a mobile game. So after class we headed over to Jord's place to figure out what we were going to make.

There were a couple of ideas thrown around, very indie ideas like entering a white spacious room and whatnot. After a couple of minutes we decided to brainstorm interesting mechanics rather than story. The theme was fantasy and we knew the majority of the teams were going to make quest games, or games where you fight a dragon, but we wanted to come up with a mechanic that very few games have.

We also took into consideration that this was going to be played on mobile devices, so we later agreed on some sort of infinite runner. Infinite runners are quite popular; I personally enjoyed games like "Robot Unicorn Attack", "Subway Surf" and "Temple Run". Also, these types of games really allow us to focus on the mechanics while only making 1 continuous level, so the scope was pretty easy to manage. We essentially wanted to make a very simple game with 1 fun mechanic.

After 20 minutes of brainstorming, Josh came up with the brilliant idea of scaling the level while running. Both Jord and I thought he was talking about scaling the character instead, but we all loved that idea too. We thought of ways to change the dynamics of the game with the character in 3 different size states:

Small: Jump highest, fit through tunnels, fall slower

Regular: jump 1 block high, fall at normal speed

Large: break through blocks, walk over small holes, fall faster

That's all we thought of really, but we were certain that there were at least a couple of games out there that had this as their main mechanic already. To our surprise, there weren't any endless runners we could find or think of that had it.

So instantly we knew what we wanted to make: an infinite runner where you can scale the character. Simple.

The host, along with the founder Ray Sharma, gave an introduction speech and showed a brief history video about The Great Canadian Appathon.

Soon after, we headed over to the room where they were hosting the event. After a short introduction, Jord and Josh went ahead and discussed art styles. It wasn't long until Harry showed up to join our team, since his previous group had lacked programmers.

The day was pretty productive. However, since I was new to programming in Unity, I ran into a bunch of annoyances and script errors. But by the end of the day I had managed to get a basic random level sequence generator working.

The numbers represent the index for the different types of blocks that will be made to scroll while the character is running.
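A generator like that can be sketched in a few lines. This is a Python illustration rather than our Unity script, and the function and parameter names are made up for the example.

```python
import random


def generate_sequence(length, block_types, rng=None):
    """Return a random list of block indices for the scrolling level.

    length:      how many blocks to generate
    block_types: number of different block types (indices 0..block_types-1)
    rng:         optional random.Random instance, useful for repeatable tests
    """
    rng = rng or random.Random()
    return [rng.randrange(block_types) for _ in range(length)]
```

Passing a seeded `random.Random` makes a sequence reproducible, which is handy when a particular order of blocks turns out to be impossible to get past and needs debugging.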

On the second day, I woke up and found that Jord had finished the scaling mechanic and was able to run it on the phone. The full character running animation was also completed. After implementing my random level sequencing along with some basic placeholder tiles, I began to play with the mechanics.

I discovered right away how many dynamics this mechanic can offer. For instance, I would sometimes jump too early and would normally crash into a block, but if I changed the mouse's size to the smallest at the last second I would still be able to make the jump. Just playing around with the sizing mechanic alone felt pretty enjoyable and I immediately believed that this game had a lot of potential.

On the third day we had almost all of our assets completed; all that was needed was to finish the tile arrangements and level design. We wanted to get all of the assets and functionality in as soon as possible to allow us more time for polishing and play testing.

During this process there was a lot of iteration on the tile arrangements. We didn't want the levels to feel too difficult, nor did we want them to be too simple. This was probably the most tedious process: not only did I have to arrange tiles in a way that kept the difficulty balanced, but I also had to make sure that every single set could be randomly sequenced after any other without making it impossible for the player to get past.

A couple of hours later, we had managed to get a working game with a points system. There were about 2-3 hours left before the submission deadline and we immediately ported what we had over to the phone for others to test out. The response was quite positive; people seemed to like the idea and picked up the mechanic rather quickly.

We even had the founder of XMG Studio, Ray Sharma, come play our game in the last hour during his surprise visit to UOIT (What are the odds? lol). I remember him saying that our game was one of the better ones he had played.

After further polishing and adding in all of the menus, we finally submitted the game and celebrated. It felt good making a whole game in only 48 hours, and we were pretty proud of what we've accomplished.

It wasn't until a week later that we found out we had made the top 25 list. 3 UOIT teams managed to make it in total, which was pretty amazing. We were all excited and looked forward to the following week, when they would reveal the top 15.

On Thursday of the following week, I was pretty anxious to find out if we had made it to the top 15 list. That alone would have satisfied me at the time, since the 15 teams get a special invite to the "Canadian Open Data Experience" (CODE). Also, making it that far would already be a great achievement to put on our resumes.

It took a while for XMG to release the list, but as soon as they did I immediately began to look for our team. I scrolled down, finally found our name and was relieved to see it on there.

...After a closer glance, I was surprised to find that we had not only made the top 15, but we were among the 3 finalists! I really couldn't believe it at the time; it was quite shocking and I didn't really think we'd make it this far. It was one of the most exciting moments I've had in a long time. My group mates were overjoyed to find out the news and we were all super excited.

Next week...

We prepared our 5 minute pitch presentation about the game and headed straight to Toronto. It was a long ride there but we made it.

The venue was like some sort of VIP party: there were XMG employees, industry people, photographers and people from the media. Phones and tablets were on display with the top 15 games on them for people to play. Free food and drinks were offered to everyone and there was also a DJ playing music.

It was simply amazing.

We also met two other students from UOIT including Kevin. His game won an award for most challenging game play.

After a good hour of networking, everybody headed over to the other room in order to prepare for the top 3 teams to pitch their games.

After some cheers and applause, it was time for us 3 teams to present.

Both teams from UofT had pretty interesting games

Team Last Minute's "Wild Fire" is a game where you have to utilize the touch screen to drag the villagers away from the dragons and into a safe home.

Team Cool Beans with "Kingdom Crushers" had a cool concept where you mine 3 different types of ores with 3 different types of miners; each miner must be used on its specific type of rock to earn the resources needed to defend your castle.

Then it was our turn to present our game "Size Matters".

After our 5 minute pitch, the judges asked why we had a giant worm chasing a mouse... There wasn't really a good explanation behind that random design decision, so we did our best to answer and laughed it off. We sat back down and the judges left the room to decide on the winners. This was the most suspenseful moment of the night.

Tony Clement finally came on stage and began delivering a speech.

It wasn't long until we were up there with the President of the Treasury Board holding a big check for 25 grand. It all occurred really fast and I was still in shock about everything that had happened. I don't think I've ever felt this much success before, and I really couldn't have done it without our team.

It's amazing how something you can make in 48 hours can bring you so much and take you so far. 2 days was all it took, and the amount of things I've learned from this and the Game Jam alone is unimaginable.

Thank you for reading :)

Saturday, 8 February 2014

UOIT Game Dev - Development Blog 5 - Shadows

In terms of visuals, shadows play a very important role in games. They can make a simple, dull scene look beautiful. They add depth, allowing the player to see how far away or how tall an object is, and they can also give the player a sense of elevation through the shadow cast by a floating object.

(http://dragengine.rptd.ch/wiki/lib/exe/fetch.php/dragengine:modules:opengl:imprshalig.png)

(http://gameangst.com/wp-content/uploads/2010/01/psm0.jpg)

(http://www.geeks3d.com/public/jegx/200910/hd5770_shadow_mapping_near_light_8192x8192.jpg)

I can go on and on, but the main point is that shadows add so much more detail and realism to a game which can make all the difference visually. So there are a few ways games do shadows in real time:

Screen Space Ambient Occlusion

(https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEiawKRcIOUzsZzdzS0OOXQszuMVE29ZStvvNj7hEttRa6ZFiuWqwNd5vww13Y1pBQGZpC3pz3l3N58YhOFC1DYXlqZQTTH7CUwS_N4PbYGB6p8l01Zw50rz-I4z9_HJr_gmnp-3WeANTfM/s1600/AO.jpg)

This technique basically takes the rendered scene depth, samples it and darkens areas in which objects occlude one another. Essentially, if objects or meshes are close to one another, light around that area bounces extra times and loses intensity, creating a slight darkness. So we take a pixel of the rendered screen, sample the neighboring pixels (occluders), rotate the samples, then reflect them around a normal read from a normal texture. This technique is quite effective at adding detail to a scene while sampling 16 or fewer times per pixel.
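To show just the core idea of comparing a pixel's depth against its neighbours, here is a heavily simplified sketch. It deliberately leaves out the rotate and reflect steps, uses a plain square neighbourhood instead of a sample kernel, and the names, `radius` and `bias` values are assumptions for the example. It is not a full SSAO implementation.

```python
def ambient_occlusion(depth, x, y, radius=1, bias=0.01):
    """Fraction of neighbours that are closer to the camera than pixel (x, y).

    depth: 2D list of depth values, smaller meaning closer to the camera
    Returns 0.0 (open) up to 1.0 (fully surrounded by occluders).
    """
    height, width = len(depth), len(depth[0])
    centre = depth[y][x]
    occluders = samples = 0
    for dy in range(-radius, radius + 1):
        for dx in range(-radius, radius + 1):
            nx, ny = x + dx, y + dy
            # Skip the pixel itself and anything outside the depth buffer.
            if (dx, dy) == (0, 0) or not (0 <= nx < width and 0 <= ny < height):
                continue
            samples += 1
            if depth[ny][nx] < centre - bias:  # neighbour sits in front of us
                occluders += 1
    return occluders / samples if samples else 0.0
```

The final colour would then be darkened by something like `1.0 - ambient_occlusion(...)`.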

Radiosity

(http://upload.wikimedia.org/wikipedia/commons/5/55/Radiosity_Comparison.jpg)

While global illumination looks stunning, it is generally too expensive to do in real time due to the number of rays needed to compute such a scene. Radiosity can be a solution, as it reduces the amount of computation needed to create a decently lit scene.

I think this link does a very good job explaining the algorithm.(http://freespace.virgin.net/hugo.elias/radiosity/radiosity.htm)

Basically, there are several passes to this technique. During each pass we go through all the polygons in the scene and look at the scene from each one's perspective. The more light a polygon sees, the more lit it will be, and after a few passes it can collect light from other lit polygons.
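The passes can be sketched with the classic radiosity update, where each patch's brightness is its own emission plus the reflected share of what it "sees" from every other patch. The form factors (how much of patch j is visible from patch i) are assumed to be given here; computing them is the expensive part and isn't shown. The names are just for the example.

```python
def radiosity(emission, reflectance, form_factors, passes):
    """Iteratively gather light between patches.

    emission:     light each patch emits on its own
    reflectance:  fraction of incoming light each patch reflects (0..1)
    form_factors: form_factors[i][j] is how much of patch j patch i sees
    passes:       number of gathering passes to run
    """
    count = len(emission)
    brightness = list(emission)  # pass 0: only the light sources are lit
    for _ in range(passes):
        brightness = [
            emission[i] + reflectance[i] * sum(
                form_factors[i][j] * brightness[j] for j in range(count)
            )
            for i in range(count)
        ]
    return brightness
```

With one emitting patch facing one dark patch, the dark patch picks up light after the first pass, and later passes let the emitter collect some of that light back.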

Shadow Mapping

This is probably the most widely used technique. It is most easily done with directional lights: we render the scene depth from the light's point of view, creating a depth texture. This depth texture is used to determine which pixels of the scene, seen from the camera's point of view, are in shadow. During the scene pass we convert each scene pixel to its coordinates from the light's point of view and determine whether there's anything in front of it. This 2 pass algorithm gives us a realistic shadow projection from objects in the scene.
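The second-pass test can be sketched like this, assuming the fragment has already been transformed into the light's normalized device coordinates (each component in -1..1). The `bias` value, the nearest-texel lookup and the function name are assumptions for the example.

```python
def in_shadow(light_ndc, shadow_map, bias=0.005):
    """Return True if something sits between the light and this fragment.

    light_ndc:  (x, y, z) of the fragment in the light's NDC space
    shadow_map: 2D list of depths (0..1) rendered from the light's view
    bias:       small offset to stop surfaces from shadowing themselves
    """
    x, y, z = light_ndc
    # Remap from [-1, 1] to [0, 1] so x, y index the texture and z is a depth.
    u, v, depth = x * 0.5 + 0.5, y * 0.5 + 0.5, z * 0.5 + 0.5
    if not (0.0 <= u <= 1.0 and 0.0 <= v <= 1.0):
        return False  # outside the shadow map: treated as lit here
    rows, cols = len(shadow_map), len(shadow_map[0])
    tx = min(int(u * cols), cols - 1)
    ty = min(int(v * rows), rows - 1)
    # In shadow if the light saw something closer than this fragment.
    return depth - bias > shadow_map[ty][tx]
```

Anything that falls outside the map simply gets no shadow in this sketch.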

Previous tests

I've played with some shadow mapping in the past and quickly realized the limitations of this algorithm. In the scene above you can see 2 things right away: the shadow is a bit pixelated and there is a cut-off point near the top of the image. This is because shadow maps are stored in a texture with a limited resolution. While it worked fine for a small area of the scene, 1 shadow map wouldn't work for a larger scene, as individual shadows would lose a lot of detail and become fuzzy.

In the prototype submitted for last semester, I essentially clipped the light projection to the character's position so there would always be a crisp shadow no matter where he goes.

I don't know much about cascaded shadow mapping yet, but I assume it's something similar, where you have a shadow projection clipped to each object and then compute the final scene from multiple shadow maps.

Saturday, 1 February 2014

UOIT Game Dev - Development Blog 4 - HDR and frustrations with Deferred Shading

This week...

So we've been talking about blurring techniques and how they are applied in computer graphics. Conveniently some of the techniques were already mentioned in my previous blog so the material wasn't new to me, but what had really caught my attention was the down sampling technique.

It's a pretty neat trick. All you really need to do is scale down the image losing pixel information, then scale it back up creating a more pixelated/blurred version. I might give this a try sometime in the near future.
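As a quick sketch of the idea on a tiny greyscale image: averaging 2x2 blocks on the way down and repeating pixels on the way back up are my assumptions here (a GPU would normally do the filtering for you), and the function names are made up.

```python
def downsample(image):
    """Halve the resolution by averaging each 2x2 block.

    image: 2D list of intensities with even width and height (assumed).
    """
    return [
        [
            (image[y][x] + image[y][x + 1]
             + image[y + 1][x] + image[y + 1][x + 1]) / 4.0
            for x in range(0, len(image[0]), 2)
        ]
        for y in range(0, len(image), 2)
    ]


def upsample(image):
    """Double the resolution by repeating each pixel (nearest neighbour)."""
    out = []
    for row in image:
        wide = [value for value in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))  # second copy of the row
    return out
```

Calling `upsample(downsample(image))` gives back an image of the original size with the fine detail averaged away.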

(http://www.cg.cs.tu-bs.de/static/teaching/seminars/ss12/CG/webpages/Maximiliano_Bottazzi/images/BlurAwareHeader.png)

I've always had mixed feelings about HDR bloom. When used correctly, games can really benefit from it and look a lot brighter, however sometimes it can be too bright or too blurry (like the one below) which can outright distract the player ruining the experience.

(http://i.imgur.com/lwk8w.jpg)

But I'm really interested in figuring out how dynamic light exposure is done in games. When playing certain maps with a lot of daylight in Counter Strike Source, I would walk out of a dark area (like a tunnel) and my whole screen would be blinded by light for a split second, then it would go back to normal. Similarly, I can walk into a darker area and have my screen dimmed before adjusting to the darkness. This just seems so cool and natural, hopefully I will have some time in the future to implement this.

(http://in.hl-inside.ru/TrainHDR.jpg)

What I've been working on...

My main focus was trying to get multiple lights to render in our game. I looked up a lighting technique called "deferred shading", which was brought up in the first week or two of class. To be honest, I didn't really understand this technique at first; calculating and rendering lights in a frame buffer really confused me.

But after reading a couple of web pages on the topic, I finally understood the concept. We essentially need to create something called the G buffer, whose job is to store pixel data (position, color, normal, etc.) from the rendered geometry. We then bind those textures in the light pass and use the appropriate pixel data to calculate the light intensity. This is a lot more efficient since we are only calculating light data for the visible pixels in a frame.
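The two passes can be sketched on the CPU like this. It's Python instead of GLSL, and the fragment dictionary layout, the function names and the single directional light are assumptions for illustration, not my actual shaders.

```python
def geometry_pass(fragments, width, height):
    """Fill the G buffer with the closest fragment's data at each pixel.

    fragments: list of dicts with 'pixel', 'depth', 'position',
               'diffuse' and 'normal' keys (hypothetical layout).
    """
    gbuffer = [[None] * width for _ in range(height)]
    depth = [[float("inf")] * width for _ in range(height)]
    for frag in fragments:
        x, y = frag["pixel"]
        if frag["depth"] < depth[y][x]:  # depth test: keep the nearest one
            depth[y][x] = frag["depth"]
            gbuffer[y][x] = (frag["position"], frag["diffuse"], frag["normal"])
    return gbuffer


def light_pass(gbuffer, to_light):
    """Shade every visible pixel once with a simple directional light.

    to_light: unit vector pointing from the surface toward the light.
    """
    image = []
    for row in gbuffer:
        out_row = []
        for texel in row:
            if texel is None:  # nothing was drawn at this pixel
                out_row.append((0.0, 0.0, 0.0))
                continue
            _position, diffuse, normal = texel
            intensity = max(0.0, sum(n * l for n, l in zip(normal, to_light)))
            out_row.append(tuple(c * intensity for c in diffuse))
        image.append(out_row)
    return image
```

The lighting math runs once per visible pixel, no matter how many overlapping fragments were drawn there during the geometry pass.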

So I created the G buffer and the passthrough shader to output the appropriate values:

No problems there, it works fine. So then I passed the textures as uniforms into my light pass shader, which calculates a simple directional light.

It seemed to work fine: the correct values were being passed over and the final result looked rather accurate. But there was 1 thing in particular that bothered me, and that was the noticeable drop in frame rate.

Why was this happening?

Isn't deferred shading supposed to be more efficient?

I spent hours trying to figure out what was wrong, and the frame rate was simply unacceptable. While I was unable to fix the issue, I discovered something in the geometry pass that was causing the lag.

fragPosition = position;

fragDiffuse = texture(myTextureSampler, UV).xyz;

fragNormal = normalize(normal);

For some reason, outputting the position and normal values puts a strain on processing. I simply commented out those two lines:

//fragPosition = position;

fragDiffuse = texture(myTextureSampler, UV).xyz;

//fragNormal = normalize(normal);

and it ran smooth, but of course I wasn't able to compute the light since I had missing values. After a few more hours I finally gave up and decided to go with forward rendering for the multiple lights. It's really unfortunate but I guess I will seek help next week in hopes of resolving the issue.

Right before writing this blog, I spent the entire day working on the lighting for our night level. This is what I have so far:

It mainly consists of point lights and glowing objects for now. I plan on adding a lot more to the scene next week, so hopefully it will look a lot better by then.

Saturday, 25 January 2014

UOIT Game Dev - Development Blog 3 - Shading techniques ...and Glow!

What we've covered...

Alright, so this week we've gone through the following shading techniques:

Emissive: emits light, or simulates light originating from an object.

(http://udn.epicgames.com/Three/rsrc/Three/Lightmass/SmallMeshAreaLights.jpg)

Diffuse: light that hits a surface and is scattered in many different directions.

(http://www.directxtutorial.com/Lessons/9/B-D3DGettingStarted/3/17.png)

Ambient: Basically, a really simple hack to simulate light bouncing around, or "light that is always there". It lights up the model even when there are no lights around.

(http://zach.in.tu-clausthal.de/teaching/cg_literatur/glsl_tutorial/images/dirdiffonlyvert.gif)

Specular: a bright spot that simulates light reflecting off a surface. It is often used to give surfaces a metallic or reflective look.

(http://www.directxtutorial.com/Lessons/9/B-D3DGettingStarted/3/19.png)

Also, the last 3 techniques (diffuse, ambient and specular) are combined to make the "Phong" shading model.

(http://tomdalling.com/wp-content/uploads/800px-Phong_components_version_4.png)

By adding all of these components together, we get a nice reflection which is a basis for most lighting techniques.

The math...

To perform light calculations you'll need components like the surface normal vector, the light source position, the view direction and the reflected light vector (there are way more, but these are the most common ones).

Diffuse is done by taking the dot product of the normalized normal and light direction vectors and clamping negative results to 0. This gives you an intensity from 0 to 1, which you multiply into your final color. Values closer to 0 give a darker color, while values closer to 1 give a brighter color.

So it should look like: DiffuseLight = max(N dot L, 0.0) * DiffuseIntensity;

And of course you can factor in an intensity variable, as shown above, to adjust how bright it is.

Specular is done by obtaining the reflected light vector, then taking the dot product of the view direction and the reflected light vector. As our view direction gets closer to the reflected light, the value gets closer to 1, which is how we get that spot of light on a surface.

SpecularLight = pow(max(R dot V, 0.0), SpecularShininess);

Here you can factor in a shininess variable: raising the value to a higher power makes the highlight smaller and sharper.

Ambient is the easiest of them all. All you need to do is hard code a set color value like (0.1,0.1,0.1) and you're done! This allows the object to be lit in areas where there is no light present.

AmbientLight = vec3(0.1,0.1,0.1);

Then you just add them all together to get the final color.

FinalColor = AmbientLight + DiffuseLight + SpecularLight;
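Putting the three terms together, here is a small Python sketch of the same math. It is only an illustration, not shader code: the function names and default values are assumptions. The reflected vector uses the usual R = 2(N dot L)N - L with L pointing from the surface toward the light, and the specular term is switched off when the surface faces away from the light.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    length = dot(v, v) ** 0.5
    return tuple(x / length for x in v)

def reflect_about_normal(to_light, normal):
    """R = 2 (N . L) N - L, with L pointing from the surface to the light."""
    d = dot(normal, to_light)
    return tuple(2.0 * d * n - l for n, l in zip(normal, to_light))

def phong(normal, to_light, to_viewer,
          diffuse_intensity=1.0, shininess=32.0, ambient=(0.1, 0.1, 0.1)):
    n = normalize(normal)
    l = normalize(to_light)
    v = normalize(to_viewer)

    # Diffuse: clamp so surfaces facing away from the light get 0.
    diffuse = max(dot(n, l), 0.0) * diffuse_intensity

    # Specular: only lit surfaces get a highlight.
    specular = 0.0
    if diffuse > 0.0:
        r = reflect_about_normal(l, n)
        specular = max(dot(r, v), 0.0) ** shininess

    # FinalColor = AmbientLight + DiffuseLight + SpecularLight
    return tuple(a + diffuse + specular for a in ambient)
```

Note that the sum can go above 1.0 (for example when looking straight down the reflection), so a real shader would normally clamp the result or multiply the terms by material colors first.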

We've also covered toon/cel shading, which is pretty interesting.

(http://dailyemerald.com/wp-content/uploads/2013/09/Wind-Waker-Windfall.jpg)

Toon shading isn't really difficult. You're doing the same shading techniques as the ones mentioned earlier, except the final intensity values are snapped into a few specified ranges (bands) instead of varying smoothly, which gives that cartoony effect.
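As a quick sketch of that banding step in Python (the function name and the choice of 4 evenly spaced bands are just assumptions for illustration; real toon shaders often use hand-picked thresholds or a lookup texture instead):

```python
import math

def toon_band(intensity, bands=4):
    """Snap an intensity in [0, 1] to one of `bands` evenly spaced levels.

    Assumes bands >= 2.
    """
    clamped = min(max(intensity, 0.0), 1.0)
    # Which band the intensity falls into: 0 .. bands - 1.
    step = min(math.floor(clamped * bands), bands - 1)
    # Map the band index back to a brightness level between 0 and 1.
    return step / (bands - 1)
```

You would feed it the diffuse intensity (the clamped N dot L) and multiply the result with the surface color, so large areas end up with exactly the same brightness.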

Now back on the development side...

We really need nice glow and holographic effects for our game since it takes place in a vibrant night city.

I did a bit of research and came across this article:

(http://www.gamasutra.com/view/feature/2107/realtime_glow.php)

After reading it, I found it to be actually pretty simple. I've come up with a few ideas...

Here's what I did.

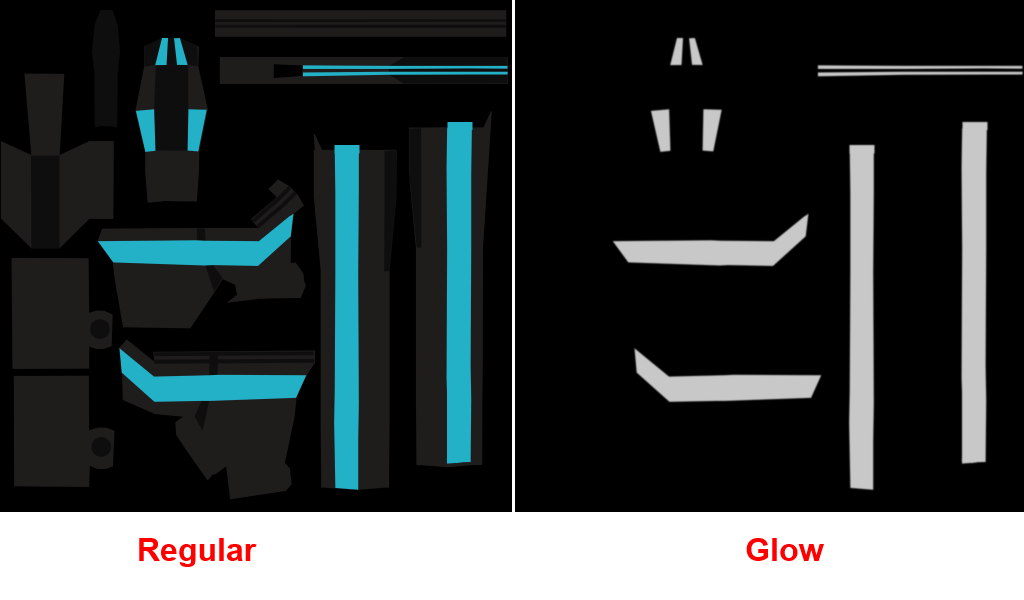

Have 2 different types of textures, 1 regular texture and 1 glow texture.

(Kevin Pang's shotgun texture)

(for glow texture, all black pixels won't glow)

Create multiple frame buffers to store the glow and blurring post processing effects.

This will need multiple draw passes

Pass 1: render all geometry with the glow texture applied

Pass 2 and 3: Ok, now blur everything using a Gaussian blur (this step takes 2 passes: one to blur horizontally, then one to blur vertically)

Pass 4: Render objects normally with normal textures

Pass 5: Apply the final glow frame texture onto the regular scene frame and BAM
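The blur and combine steps can be sketched on the CPU like this. It is a simplified Python illustration, not the game's shaders: images are grayscale lists of rows, the 5-tap kernel is a common binomial approximation of a Gaussian, and combining by adding the blurred glow onto the scene is an assumption about how the final pass is applied.

```python
# 5-tap binomial weights, a common approximation of a Gaussian (sums to 1).
KERNEL = (1 / 16, 4 / 16, 6 / 16, 4 / 16, 1 / 16)

def blur_1d(image, horizontal):
    """Blur along one axis only; edge pixels are repeated (clamped)."""
    height, width = len(image), len(image[0])
    radius = len(KERNEL) // 2
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total = 0.0
            for k, weight in enumerate(KERNEL):
                offset = k - radius
                if horizontal:
                    sx, sy = min(max(x + offset, 0), width - 1), y
                else:
                    sx, sy = x, min(max(y + offset, 0), height - 1)
                total += weight * image[sy][sx]
            out[y][x] = total
    return out

def glow_composite(scene, glow_mask):
    """Blur the glow render horizontally, then vertically, then add it on top."""
    blurred = blur_1d(blur_1d(glow_mask, horizontal=True), horizontal=False)
    return [
        [min(s + g, 1.0) for s, g in zip(scene_row, glow_row)]
        for scene_row, glow_row in zip(scene, blurred)
    ]
```

Splitting the blur into a horizontal and a vertical pass is why the step needs two draw passes: each pixel reads 5 + 5 samples instead of the 25 a full 5x5 blur would need.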

My group members and I agree that it makes things pop a lot more, and I'm quite happy with this effect.

(hologram test lol)

I'll be looking forward to using this effect in my night city. Well, that's all for this week, later!
